AWS prompt engineering course: The Free 4-Hour Course to Master Generative AI on Amazon Bedrock

Why AWS is the Next Frontier for Prompt Mastery

Let’s be real — prompt engineering isn’t just about chatting with AI anymore. It’s the language that powers entire ecosystems. The new AWS prompt engineering course on Skill Builder isn’t another “how to talk to ChatGPT” tutorial. It’s a crash course in how to build the next generation of AI experiences — the kind that actually matter in business, in design, and in the future of human-machine creativity.

And here’s the best part — it’s 100% free, official, and backed by Amazon Bedrock, AWS’s cloud platform for large language models.

You’re not just learning how to write effective prompts. You’re learning how to craft context, structure reasoning, and optimize performance using real-world AI tools trusted by the biggest companies in the world.

Who This Course Is Really For

This isn’t a “dabble for fun” kind of class. The AWS Foundations of Prompt Engineering course is designed for people ready to turn curiosity into skill — and skill into a career.

If you fall into one of these roles, you’ll feel right at home:

- Prompt Engineers who want to sharpen their techniques and understand model behavior at scale.

- Data Scientists exploring how prompt design impacts results across datasets.

- Developers and ML Engineers integrating AI into cloud products.

- Solution Architects building systems that depend on responsible, scalable AI.

It’s also beginner-friendly — just not “lazy beginner” friendly. AWS recommends a few short prep modules before diving in:

- Introduction to Generative AI – Art of the Possible

- Planning a Generative AI Project

- Amazon Bedrock: Getting Started

These set the stage so you can fully absorb how context in prompts, prompt optimization, and model tuning actually work in a live AWS environment.

Inside the 4-Hour AWS Prompt Engineering Course

This course is structured like a real-world AI workflow — not a fluffy overview. Every lesson connects back to how Amazon Bedrock handles foundation models (FMs) and LLMs, so you actually understand the “why” behind each technique.

Module 1: Understanding Foundation Models (FMs & LLMs)

It starts deep. You’ll learn what foundation models truly are — not just buzzwords. AWS breaks down how they’re trained, fine-tuned, and deployed at scale using self-supervised learning. You’ll also explore how text-to-text and text-to-image models work, and how Bedrock powers them through secure, managed APIs.

This part feels like learning the DNA of AI — the stuff that makes every model tick.

Module 2: Basic Prompt Techniques — The Core Toolkit

Here’s where your brain clicks. You’ll finally understand why some prompts work like magic and others fall flat.

AWS walks you through the building blocks of prompt engineering:

- Zero-shot prompting — asking the model to perform tasks without examples.

- Few-shot prompting — giving small examples to guide it.

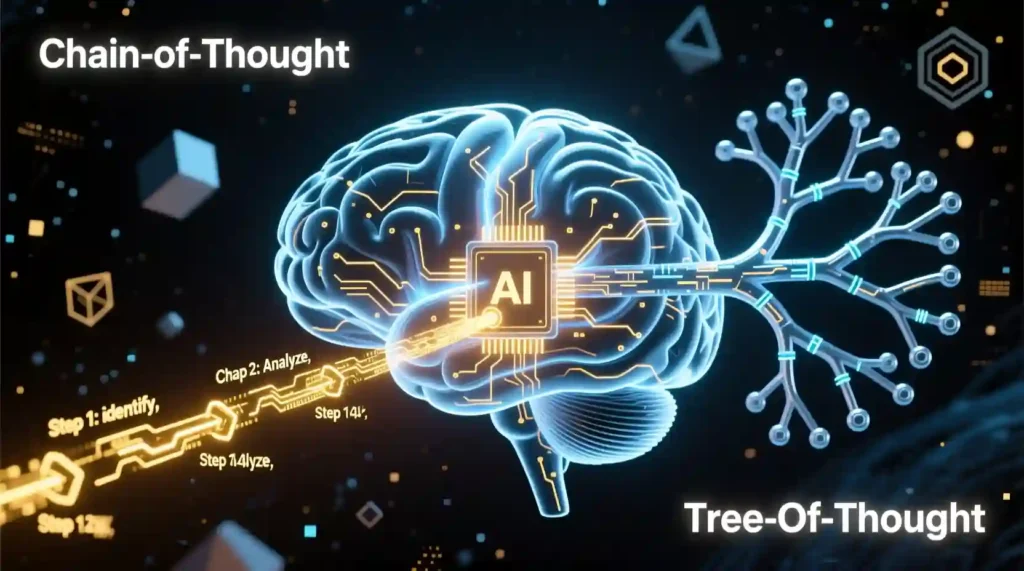

- Chain-of-thought prompting — teaching the model to reason step-by-step.

It’s not about memorizing terms — it’s about feeling how each method changes the model’s response. You start seeing the connection between effective prompts, clarity, and creative control.

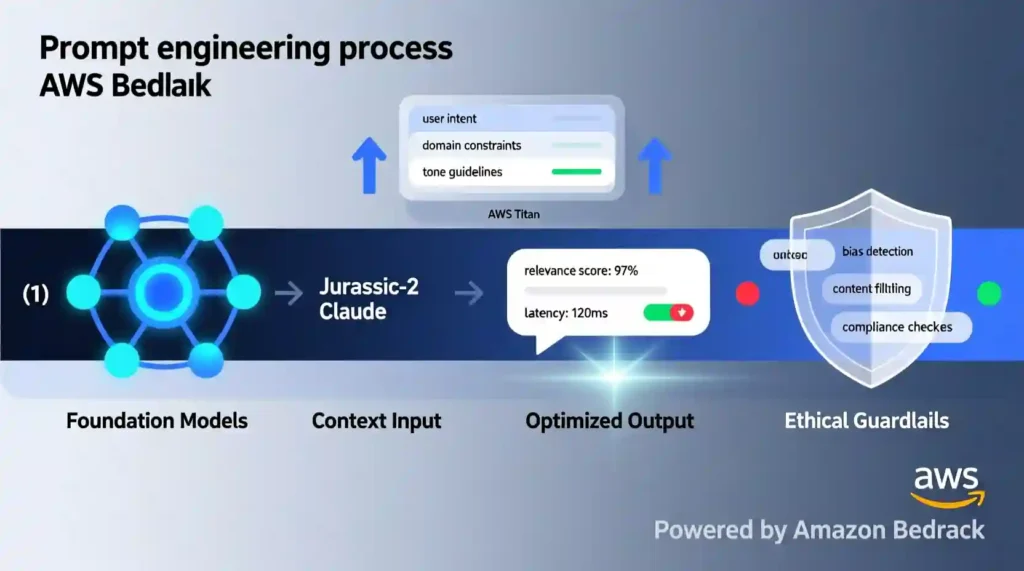

And yes, you’ll learn prompt design patterns that apply across different LLMs — from Amazon Titan to Anthropic Claude to AI21’s Jurassic-2 — all available on Bedrock.

Module 3: Advanced Prompt Engineering & Model Optimization

This is where AWS really flexes. You’ll get into advanced prompt patterns, parameter tuning, and model-specific adjustments that can make or break your output quality.

It’s not enough to write good prompts anymore — you need to optimize them.

That means learning how temperature, top_k, and top_p parameters affect creativity and coherence.

You’ll also see how tree-of-thought prompting helps manage multi-step reasoning and improve logical consistency — something that’s critical in complex business or research contexts.

Every lesson builds your confidence in crafting prompts AWS-style — meaning structured, measurable, and cloud-ready.

Ethics, Guardrails, and Bias — The Responsible Side of Prompting

AWS doesn’t just teach you to create — it teaches you to create responsibly.

In enterprise AI, that’s not optional.

You’ll explore how bias creeps into foundation models, how to design prompts that reduce it, and how to recognize when an output is unsafe or unfair.

AWS also dives into prompt misuse, showing you how to guard against prompt injection, jailbreaking, and other security risks.

This part might sound heavy, but it’s essential. If you ever plan to deploy AI in a professional setting, you’ll need these guardrails. AWS shows how to configure them directly in Bedrock.

What Makes AWS’s Approach Different

You can take prompt engineering courses anywhere. But AWS’s version is the only one that fuses real-world application, enterprise-level architecture, and ethical grounding into one course.

| Feature | AWS Foundations of Prompt Engineering | Standard Prompt Course (Coursera, Udemy) |

| Authority | Official AWS Training & Certification | Independent Instructor |

| Integration | Deep Amazon Bedrock + Titan + Claude | Usually ChatGPT or local LLMs |

| Cost | 100% Free + Free Certificate | Often paid |

| Focus | Bias mitigation, guardrails, real-world systems | Syntax, creativity, use cases |

This isn’t theory. It’s a roadmap to career-grade AI fluency — guided by AWS engineers who actually build this stuff.

Ready to Start? Here’s the Plan

If you’ve read this far, you’re already more serious about AI than most people scrolling LinkedIn quotes.

So here’s what you do next:

- Go to AWS Skill Builder.

- Search “Foundations of Prompt Engineering.”

- Sign in with your free AWS account.

- Start the 4-hour digital course and begin shaping your future with Amazon Bedrock prompt engineering.

Don’t overthink it. Just start.

The sooner you learn to speak fluently with AI, the sooner you stop being replaced by it.

The AWS prompt engineering course doesn’t treat prompts like code snippets. It treats them like conversations with a mind that doesn’t feel, but understands patterns. You learn to design those patterns — to set context, tone, and logic — so the AI becomes your creative and cognitive extension.

You stop typing random inputs. You start engineering meaning.

How Context Changes Everything

One of the biggest lessons from this AWS training is that context is the soul of every effective prompt.

A model only knows what you give it — nothing more. But when you layer details — goals, constraints, audience, format — the LLM transforms from a passive responder into an active collaborator.

AWS calls this context-driven prompting, and it’s built into every exercise in the course. You’ll practice:

- Giving structured background information.

- Framing tasks with precision (“Write as if explaining to a new developer”).

- Anchoring responses to verified data sources or style guidelines.

That’s prompt design at the professional level — not magic words, but intent translated into structure.

The Science of Prompt Optimization

Once you understand the power of context, AWS walks you into prompt optimization — refining every element to guide the LLM’s behavior.

You’ll see how simple tweaks — like specifying temperature or reordering steps — can radically shift the quality of your output.

You’ll learn to tune parameters for:

- Creativity: High temperature for brainstorming, low for accuracy.

- Focus: Using chain-of-thought prompting to keep reasoning linear.

- Reliability: Applying few-shot or tree-of-thought prompting to make the model consistent under pressure.

It’s hands-on, technical, and honestly addictive. You start feeling like an AI whisperer — adjusting the invisible dials that control how intelligence flows.

Chain-of-Thought and Tree-of-Thought: Thinking Through Prompts

The course gets deep into LLM reasoning strategies, the kind most people don’t even know exist.

- Chain-of-Thought (CoT) prompting helps models reason step-by-step. You teach them to “show their work,” improving accuracy for complex tasks like math, logic, and programming.

- Tree-of-Thought (ToT) prompting pushes that further — letting the model explore multiple reasoning paths before choosing the best one.

These aren’t just techniques. They’re prompt engineering best practices that separate amateurs from professionals.

If you’ve ever wondered how companies build tools that think before they answer, this is exactly how.

Why Amazon Bedrock Changes the Game

The reason this course stands out is because it’s grounded in Amazon Bedrock, not just theory.

You’re not learning prompts in isolation — you’re learning how to apply them in real AWS workflows using Bedrock’s foundation models:

- Amazon Titan for text generation.

- Anthropic Claude for conversational AI.

- AI21 Jurassic-2 for high-quality content and reasoning.

Each model has its quirks, and AWS teaches you how to adapt your prompt strategy for each one — a skill most prompt courses completely skip.

This means you leave the course not just with ideas, but with deployable, production-ready prompt knowledge.

Ethics and Safety: Where AWS Leads

Here’s what hit me hardest about this course — AWS doesn’t sugarcoat AI’s risks.

They show you what prompt misuse looks like:

- Jailbreaking

- Prompt injection

- Bias exploitation

Then they teach you how to build guardrails — not just in prompts, but in architecture. You learn to catch malicious inputs, filter outputs, and set ethical constraints directly inside Bedrock.

Because the truth is, responsible AI starts with responsible prompts.

Comparing AWS with Other Prompt Courses

| Feature | AWS Prompt Engineering Foundation Course | Others (Coursera, DeepLearning.AI, Udemy) |

| Integration | Deep Amazon Bedrock + Titan + Claude | Usually ChatGPT or Gemini only |

| Depth | Focuses on model behavior and reasoning | Focuses on syntax and examples |

| Certification | Free AWS-verified certificate | Paid or unofficial certificates |

| Ethics Focus | Includes bias, guardrails, prompt safety | Often skipped entirely |

| Outcome | Cloud-ready AI deployment skills | Basic text prompt skills |

AWS built this course for people who want to go beyond “AI user” status — and become AI engineers.

Who Should Take This Course (and Who Shouldn’t)

Take this course if you:

✅ Want to work in AI development, data science, or cloud computing.

✅ Already love experimenting with LLMs and want to turn it into a real skill.

✅ Are curious about how context, logic, and creativity merge inside an AI system.

Skip it if you’re just here to make memes or cheat your essays. This isn’t that.

My Final Take: Why You Shouldn’t Sleep on AWS Skill Builder

When you finish the AWS prompt engineering course, you’ll realize something simple but powerful:

The future belongs to those who speak AI fluently — not just use it.

This course teaches you that fluency.

You learn how to craft context, guide reasoning, design structure, and deploy prompts that perform under pressure.

It’s free. It’s certified. And it’s built by the people who literally run the cloud that powers half the internet.

So yeah, skip another productivity app.

Take this course.

Learn prompt patterns, LLM strategies, and Amazon Bedrock prompt engineering — because in 2025 and beyond, the real engineers are the ones who can talk to machines with purpose.

👉 Start Now: Go to AWS Skill Builder, search “Foundations of Prompt Engineering”, and begin your 4-hour journey.

It’s time to stop guessing prompts — and start engineering intelligence.

Pingback: FREE Microsoft AI for Beginners (12-Week LLM & NN Course) - zadaaitools.com