How to Use Runway AI for Video Generation: The Ultimate Guide (Part 1)

The Rise of AI Filmmaking

Imagine describing a movie scene in words — and watching it come alive in cinematic detail within minutes. That’s not a futuristic dream anymore; that’s what Runway AI for video generation makes possible today.

Runway has quickly become the go-to platform for creators, marketers, and filmmakers who want to generate videos using only text prompts, reference images, or short clips. Instead of spending days on animation or costly production, you can visualize stories, pitch concepts, and even produce short films using AI — all from your browser.

This post is your ultimate hands-on guide to using Runway AI for video generation, from setting up your first project to fine-tuning visuals with professional-grade control. Whether you’re a YouTuber experimenting with cinematic shorts, a filmmaker storyboarding a pitch, or a content creator building branded reels, this 3-part guide will walk you through everything step by step.

What Is Runway AI and Why Use It

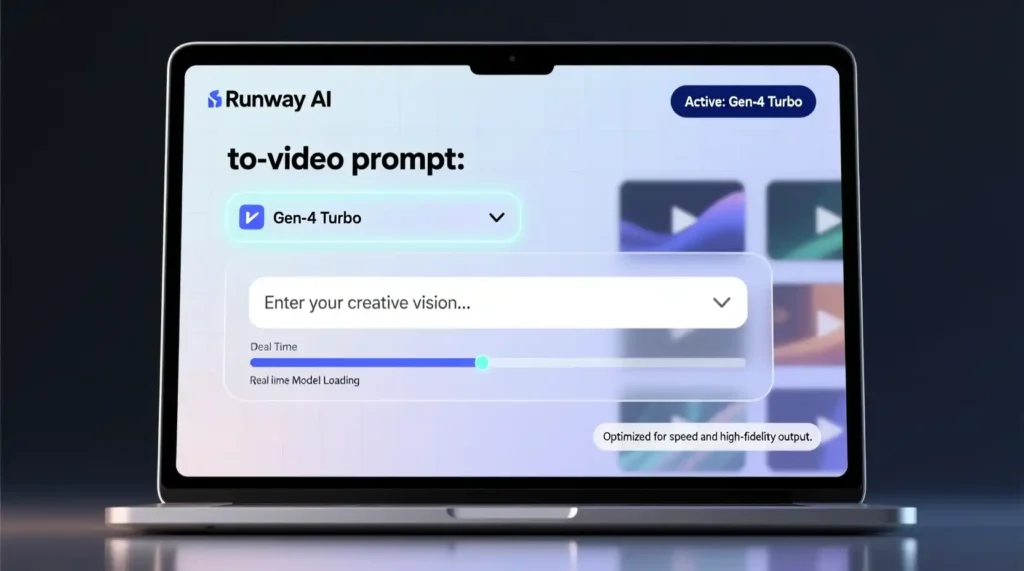

Runway AI is a creative AI platform built to generate images and cinematic videos from natural language prompts, images, or video clips. Using advanced models like Gen-3 and Gen-4 Turbo, Runway transforms ideas into motion — no cameras, actors, or expensive setups needed.

Unlike traditional editing or animation tools, Runway runs entirely in the cloud, letting you work from any browser with intuitive controls. The platform’s mission is simple: to make storytelling faster, smarter, and accessible to everyone.

Key reasons creators love Runway:

- Cinematic quality: The latest models produce realistic motion, depth, and camera effects.

- Creative flexibility: Turn text, sketches, or photos into fully animated scenes.

- Minimal learning curve: Anyone can create high-quality videos without mastering editing software.

- AI-assisted filmmaking: Visualize scripts, create mood boards, or prototype ideas before filming.

From film studios to solo creators, Runway has become the AI equivalent of a film production team — enabling full pre-production visualization, animation, and video experimentation on demand.

How to Use Runway AI for Video Generation: Step-by-Step Tutorial

Let’s dive into the practical side. Here’s exactly how you can start generating your first cinematic clip using Runway AI.

Step 1: Start a New Project

- Go to runwayml.com and sign up or log in.

- On your dashboard, click “Generative Session” to begin a new creation.

- Choose your preferred model:

  - Gen-3 Alpha: Fast, creative, ideal for artistic motion.

  - Gen-4 Turbo: Highest realism, precise motion, cinematic results.

The interface is clean and beginner-friendly — you’ll see options to upload reference material, write prompts, and adjust video settings.

Pro tip: Use Gen-4 Turbo if your goal is realistic character movement or cinematic storytelling. Gen-3 works better for conceptual or artistic visuals.

Step 2: Choose Input Mode

Runway gives you two main ways to start:

- Text to Video: Perfect for generating a scene from scratch using only words.

- Image to Video: Best for controlling the visual base — upload an image (character, landscape, frame) and let AI animate it based on your motion prompt.

Many creators prefer combining an image with a text prompt: the image gives you visual consistency, and the text gives you motion control.

Example:

Upload a photo of a misty battlefield and add this prompt:

“A knight walks through a foggy battlefield, cinematic tracking shot from behind, dramatic lighting.”

This approach tells Runway what to move, how the camera behaves, and what mood to maintain.

Step 3: Write a Clear and Detailed Prompt

Your prompt is the director’s note for the AI. The more descriptive and cinematic your prompt, the better the output.

Write your prompt the way you would brief a cinematographer. Mention:

- Camera movements: pan, tilt, zoom, dolly, tracking.

- Character actions: walking, turning, running, reacting.

- Environment: lighting, weather, style.

- Mood or genre: sci-fi, fantasy, noir, documentary.

Example prompt:

“A lone astronaut stands on a red desert planet at sunset. The camera slowly zooms in from a distance, showing dust particles in the air and the glow of a nearby spaceship.”

This level of detail gives Runway context to produce cohesive and cinematic motion.

💬 Pro tip: Keep the prompt under 100 words but rich in visual cues. Avoid vague terms like “cool video” or “beautiful scene” — be specific about what’s in frame.
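If you like to work systematically, the checklist above can be turned into a tiny prompt template. The sketch below is plain Python and does not talk to Runway at all; the `ShotPrompt` class, the `check_prompt` helper, and the vague-phrase list are hypothetical names made up for this illustration, and the 100-word limit simply mirrors the pro tip.

```python
from dataclasses import dataclass

# Hypothetical limits taken from the pro tip above, not from Runway itself.
MAX_WORDS = 100
VAGUE_PHRASES = ("cool video", "beautiful scene", "cool cinematic scene")


@dataclass
class ShotPrompt:
    subject_action: str  # who is in frame and what they do
    camera: str          # pan, tilt, zoom, dolly, tracking...
    environment: str     # lighting, weather, style
    mood: str            # genre or tone

    def render(self) -> str:
        """Join the non-empty parts into sentences, subject first."""
        parts = [self.subject_action, self.camera, self.environment, self.mood]
        return " ".join(p.strip().rstrip(".") + "." for p in parts if p.strip())


def check_prompt(prompt: str) -> list[str]:
    """Return a list of warnings; an empty list means the prompt passes."""
    warnings = []
    word_count = len(prompt.split())
    if word_count > MAX_WORDS:
        warnings.append(f"prompt has {word_count} words (limit {MAX_WORDS})")
    lowered = prompt.lower()
    for phrase in VAGUE_PHRASES:
        if phrase in lowered:
            warnings.append(f"vague phrase: {phrase!r}")
    return warnings


prompt = ShotPrompt(
    subject_action="A lone astronaut stands on a red desert planet at sunset",
    camera="The camera slowly zooms in from a distance",
    environment="Dust particles drift in the air, lit by the glow of a nearby spaceship",
    mood="Quiet, awe-filled sci-fi tone",
).render()
print(prompt)
print(check_prompt(prompt))  # []
```

Keeping each cue in its own field makes it easy to swap just the camera move or just the lighting between generations.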

Step 4: Adjust Settings for Visual Control

Runway lets you fine-tune several key settings before generating your clip.

Here’s how to optimize:

- Aspect Ratio: Choose the format that matches your purpose:

  - 16:9 → YouTube / film

  - 1:1 → Instagram posts

  - 9:16 → Reels / TikTok

- Resolution:

  - Basic: 720p

  - Pro: 1080p or 2K

  - Premium: Upscaling to 4K (Gen-4 only)

- Clip Length:

  - Clips typically run 4–10 seconds; longer clips consume more credits.

- Motion Controls:

  - Adjust motion intensity, camera movement type, and prompt weight (how strongly the AI follows your prompt).

Advanced tweak: Reduce motion intensity if your clip looks chaotic; increase it for dynamic movement like tracking or drone-style shots.
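Picking an aspect ratio and resolution is simple arithmetic once you know the short side of the frame. Here’s a minimal sketch, assuming that “720p” or “1080p” names the shorter edge; the platform keys and the `frame_size` helper are hypothetical, and Runway’s actual output dimensions may differ slightly by model.

```python
from fractions import Fraction

# Platform targets from the list above, mapped to (width, height) ratios.
PLATFORM_RATIOS = {
    "youtube": (16, 9),
    "film": (16, 9),
    "instagram_post": (1, 1),
    "reels": (9, 16),
    "tiktok": (9, 16),
}


def frame_size(platform: str, short_side: int) -> tuple[int, int]:
    """Return (width, height) in pixels, treating short_side as the shorter edge."""
    w, h = PLATFORM_RATIOS[platform]
    ratio = Fraction(w, h)
    if ratio >= 1:
        # Landscape or square: the height is the short side.
        return round(short_side * ratio), short_side
    # Portrait: the width is the short side.
    return short_side, round(short_side / ratio)


print(frame_size("youtube", 1080))        # (1920, 1080)
print(frame_size("reels", 1080))          # (1080, 1920)
print(frame_size("instagram_post", 720))  # (720, 720)
```

Using `Fraction` keeps the ratio exact, so 16:9 at 1080 lands on 1920 without floating-point rounding surprises.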

Part 2: How to Use Runway AI for Video Generation – From Prompt to Polished Clip

Bringing Your Vision to Life: Generate and Refine with Runway AI

Now that you’ve set up your scene, written your prompt, and fine-tuned your settings, it’s time to bring your video to life.

This is where Runway AI for video generation truly shines — turning words and static images into full cinematic motion, complete with camera dynamics, depth, and emotion.

Step 5: Generate the Video

Once your prompt and parameters are ready, hit the “Generate” button.

Runway’s servers process your inputs and render the clip, typically within a few minutes, depending on:

- Model (Gen-3 vs Gen-4)

- Resolution & length

- Complexity of your motion and scene

While you wait, you can track progress or queue multiple generations for comparison.

Quick Tips During Generation

- Use “Multiple Generations” mode to explore variations of the same prompt.

- Try slight prompt edits like “camera rotates around the knight” or “dust particles illuminated by headlights”; small tweaks can produce noticeably different visuals.

- Note the seed of your favorite result if you plan to reproduce a consistent look later.
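Why save a seed? Holding it fixed lets you attribute a visual change to your prompt edit rather than to randomness. The sketch below builds a hypothetical batch plan, assuming Runway lets you set the seed for a generation; the `variation_plan` helper and its `prompt`/`seed` dictionary fields are illustrative and are not Runway’s API.

```python
import random
from typing import Optional


def variation_plan(base_prompt: str, tweaks: list[str],
                   seed: Optional[int] = None) -> list[dict]:
    """Return one request per run: the base prompt, then one per tweak."""
    if seed is None:
        seed = random.randrange(2**32)  # choose once, then reuse for every run
    plan = [{"prompt": base_prompt, "seed": seed}]
    for tweak in tweaks:
        # Append a single tweak to the base prompt; the seed never changes.
        plan.append({"prompt": f"{base_prompt} {tweak}", "seed": seed})
    return plan


base = "A knight walks through a foggy battlefield, cinematic tracking shot from behind."
runs = variation_plan(base, [
    "The camera rotates around the knight.",
    "Dust particles glow in shafts of light.",
], seed=1234)
for run in runs:
    print(run["seed"], run["prompt"])
```

Changing one detail per run makes it much easier to tell which edit produced the look you like.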

When rendering completes, you can preview the clip right in your browser — smooth, cinematic, and ready for iteration.

Step 6: Iterate and Edit Like a Pro

One of Runway’s biggest strengths is that it doesn’t stop at generation. It gives you a full suite of AI editing tools to refine your clip further.

Here’s what you can do next:

1. Restyle

Transform your generated video into different artistic modes — anime, sketch, vaporwave, or cinematic noir. This is especially powerful for creators who want brand consistency or thematic variety.

Example:

Turn your fantasy battlefield into a cel-shaded anime style or a film noir version with dramatic lighting.

2. Expand

If your scene feels too tightly framed, use Expand to outpaint the borders, extending your shot beyond its initial frame while keeping the style intact.

3. Adjust Video

Fine-tune your output with:

- Trim (cut out unwanted parts)

- Speed (slow-mo or hyperlapse effects)

- Shake (simulate handheld shots)

- Reverse (add artistic effects or creative looping)

These adjustments help keep your AI videos polished and visually coherent.
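Trim, Speed, and Reverse change your clip’s running time in predictable ways. Here’s the arithmetic as a small sketch; `adjusted_duration` is a hypothetical helper, not a Runway function, and “boomerang” here means playing the trimmed clip forward and then reversed, which is one common way to build a loop.

```python
def adjusted_duration(clip_seconds: float, trim_start: float, trim_end: float,
                      speed: float = 1.0, boomerang: bool = False) -> float:
    """Running time after trimming, retiming, and an optional forward+reverse loop.

    speed > 1 shortens playback (hyperlapse); speed < 1 lengthens it (slow motion).
    """
    if not 0 <= trim_start < trim_end <= clip_seconds:
        raise ValueError("trim points must satisfy 0 <= start < end <= clip length")
    if speed <= 0:
        raise ValueError("speed must be positive")
    duration = (trim_end - trim_start) / speed
    # A boomerang plays the trimmed section twice: forward, then reversed.
    return duration * 2 if boomerang else duration


print(adjusted_duration(10, 1, 9))                  # 8.0
print(adjusted_duration(10, 1, 9, speed=0.5))       # 16.0 (slow motion)
print(adjusted_duration(10, 0, 10, speed=2))        # 5.0 (hyperlapse)
print(adjusted_duration(10, 2, 6, boomerang=True))  # 8.0
```

This is handy when you need a clip to hit an exact length, such as a 15-second Reel.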

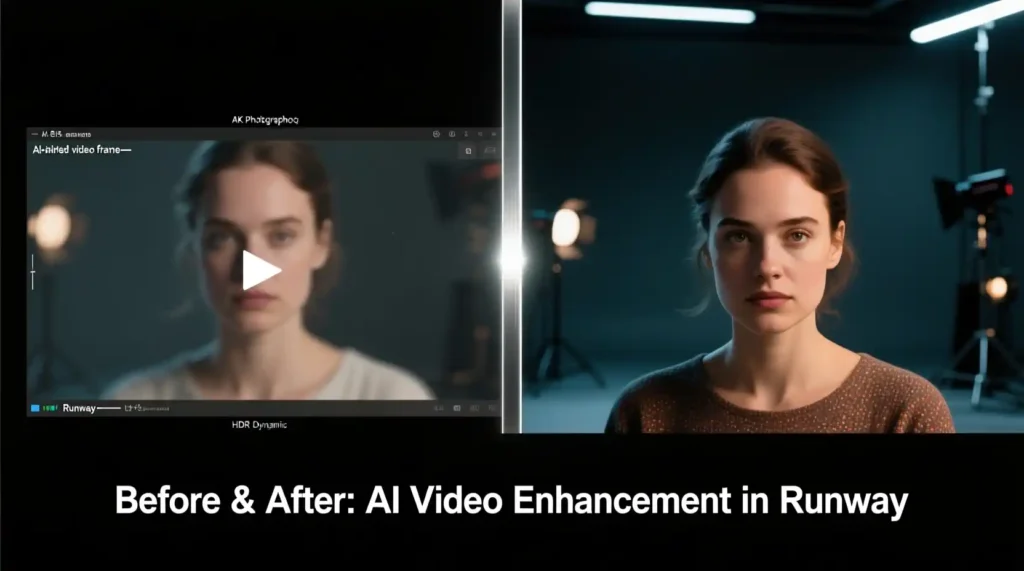

4. Lip Sync & 4K Upscaling

- Add AI-driven Lip Sync if your characters speak or perform dialogue.

- Use Upscale to 4K to achieve crisp, broadcast-ready quality — a must for commercial or cinematic use.

All these editing options are non-destructive, meaning you can re-edit or re-render without losing prior versions.

Real Examples and Case Studies

AI filmmaking isn’t just a novelty — it’s transforming the creative process across industries. Here are real-world examples of how professionals are using Runway AI for video generation to reshape storytelling:

Case Study 1: Pre-Production Visualization

Filmmaker: Elena Martinez

Project: “Echoes in the Void”

Elena used Runway’s Gen-4 model to visualize her film’s storyboards before production began. By generating cinematic scenes for key moments, she pitched the film to producers with a visually compelling AI storyboard — helping secure funding months ahead of schedule.

“Runway allowed me to show the emotion and tone of the film, not just describe it. It made investors feel the story before it was shot.” — Elena Martinez

Case Study 2: Production Pre-Visualization

Director: Marcus Hale

Project: Commercial Pre-Vis for a Car Brand

Marcus used Runway AI to pre-visualize dynamic camera shots that would later be replicated with real vehicles. This helped the cinematography team plan lighting, motion paths, and drone angles with precision.

“The AI version gave us a visual test run. It saved us 2–3 days of setup time on location.” — Marcus Hale

Case Study 3: Post-Production Enhancement

Creator: David Chen

Project: AI Short Film – “Half Life”

David built his entire short film using Runway’s text-to-video generation, blending cinematic realism with surreal effects. He later refined the clips using Runway’s Restyle and Expand features, then added sound in post-production using traditional tools.

“It’s not replacing filmmakers — it’s empowering them. I could tell a story that looked like a $50,000 production for under $200.” — David Chen

Pro Tips and Advanced Features

To take your AI video generation from good to mind-blowing, here are insider tips from power users and AI artists:

- Combine Reference + Text: Always use both. The image anchors your visual tone; the text defines motion. Gen-4 handles this hybrid input well.

- Experiment with Motion Brush: A hidden gem that lets you paint the areas of the frame that should move. Perfect for animating only certain objects (like hair, flags, or clouds).

- Use “Prompt Weight” Smartly: If your output looks too different from your input image, increase prompt weight. If it’s too rigid, lower it for more creative flow.

- Style Consistency with Fixed Seeds: Fixing the random seed number helps keep characters and lighting consistent across multiple clips, which is ideal for multi-shot storytelling or music videos.

- Export Silent Video & Add Sound in Post: Runway videos are silent by default. Import them into software like Descript, CapCut, or DaVinci Resolve to sync soundtracks, dialogue, or ambient effects.

- Leverage Styles: Use built-in style presets (anime, cinematic, vaporwave, claymation, photorealistic) to match your brand tone or storytelling theme.

- Iterate Creatively, Not Repetitively: Each generation should test a new cinematic angle, lighting, or movement. Don’t just regenerate the same prompt; evolve it.
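If you’d rather script the “add sound in post” step than do it in an editor, ffmpeg can merge a silent export with an audio track. This is our substitution for the GUI tools named above, not a Runway feature; the file names are placeholders, and the `mux_command` helper is hypothetical. The sketch only builds and prints the command.

```python
import shlex


def mux_command(video: str, audio: str, output: str) -> list[str]:
    """Build an ffmpeg command that adds an audio track to a silent video."""
    return [
        "ffmpeg", "-y",
        "-i", video,      # input 0: the silent Runway export
        "-i", audio,      # input 1: music, voiceover, or ambience
        "-map", "0:v:0",  # take the video stream from input 0
        "-map", "1:a:0",  # take the audio stream from input 1
        "-c:v", "copy",   # copy the video as-is (no re-encode)
        "-c:a", "aac",    # encode the audio as AAC for MP4
        "-shortest",      # stop when the shorter stream ends
        output,
    ]


cmd = mux_command("runway_clip.mp4", "soundtrack.wav", "final_with_audio.mp4")
print(shlex.join(cmd))
```

To run it, pass `cmd` to `subprocess.run(cmd, check=True)` on a machine with ffmpeg installed. Copying the video stream avoids a second lossy encode of your Runway clip.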

Common Mistakes to Avoid

Even with powerful tools, some common missteps can derail your results.

Here’s what to watch out for when using Runway AI for video generation:

- Too-Vague Prompts: “A cool cinematic scene” won’t cut it — give specific direction.

- Skipping Reference Images: Without a base image, character and style consistency can fail.

- Ignoring Aspect Ratios: If your project is for Instagram Reels, generate vertical (9:16) — not widescreen.

- Low Resolution Exports: Don’t settle for 720p unless it’s a draft. For production, aim for 1080p+ or upscale.

- Over-Iterating Without Tweaks: Regenerating the same input without adjusting the prompt leads to diminishing returns.
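You can also turn these mistakes into a quick preflight check before you spend credits. This is a hypothetical sketch: `GenerationJob`, `preflight`, the vague-phrase list, and the 8-word minimum are our own assumptions, while the 9:16 and 1080p rules come straight from the list above.

```python
from dataclasses import dataclass
from typing import Optional

VERTICAL_PLATFORMS = {"reels", "tiktok"}
# Assumed examples of vague wording; extend with your own pet phrases.
VAGUE_PHRASES = ("cool video", "cool cinematic", "beautiful scene")
MIN_PROMPT_WORDS = 8  # assumption: shorter prompts rarely carry enough direction


@dataclass
class GenerationJob:
    prompt: str
    platform: str                # e.g. "youtube", "reels", "tiktok"
    aspect_ratio: str            # e.g. "16:9", "9:16", "1:1"
    resolution_p: int            # short side in pixels, e.g. 720 or 1080
    reference_image: Optional[str] = None
    is_draft: bool = False


def preflight(job: GenerationJob) -> list[str]:
    """Return one message per mistake found; an empty list means ready to go."""
    issues = []
    lowered = job.prompt.lower()
    if any(p in lowered for p in VAGUE_PHRASES) or len(job.prompt.split()) < MIN_PROMPT_WORDS:
        issues.append("prompt looks vague; add camera, action, and lighting details")
    if job.reference_image is None:
        issues.append("no reference image; character and style consistency may drift")
    if job.platform in VERTICAL_PLATFORMS and job.aspect_ratio != "9:16":
        issues.append(f"{job.platform} expects 9:16, got {job.aspect_ratio}")
    if not job.is_draft and job.resolution_p < 1080:
        issues.append("final export below 1080p; raise resolution or upscale")
    return issues


job = GenerationJob(prompt="A cool cinematic scene", platform="reels",
                    aspect_ratio="16:9", resolution_p=720)
for issue in preflight(job):
    print("-", issue)
```

The example job trips all four checks; drafts are allowed to stay at 720p.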

Real-World Impact

What makes Runway AI for video generation revolutionary isn’t just the speed or ease — it’s the democratization of creativity.

In the past, cinematic storytelling required budgets, crews, and post-production time. Now, creators can visualize, test, and publish film-quality clips within a few hours.

Whether you’re a solo YouTuber, indie filmmaker, or marketing team — Runway levels the playing field between imagination and execution.

Part 3: How to Use Runway AI for Video Generation – Pricing, FAQs & Why It’s a Game-Changer

Runway AI Pricing and Plan Overview

Like most advanced AI platforms, Runway AI for video generation runs on a credit-based system combined with subscription tiers.

This flexible setup means you only pay for the power you use — perfect for both casual creators and professionals.

Here’s a quick breakdown:

| Plan | Cost (USD/month) | Credits | Resolution Limit | Best For |
|---|---|---|---|---|
| Free Trial | $0 | Limited | 720p | Exploring Runway’s core features |
| Standard | ~$12 | ~625 credits | 1080p | Hobbyists, content creators |
| Pro | ~$28 | ~2,250 credits | 2K | Filmmakers, agencies |
| Unlimited / Enterprise | Custom | On request | 4K | Studios, production houses |

How Credits Work:

Each video generation, upscale, or restyle uses a certain number of credits. The cost depends on the clip length, resolution, and model type (Gen-3 vs Gen-4 Turbo). Pro plans also offer faster rendering priority and access to higher-quality outputs.
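To estimate how far a plan’s credits go, multiply a per-second rate by clip length and divide your monthly allowance by the result. The per-second rates below are placeholder assumptions for illustration (check Runway’s pricing page for current figures), and `clip_cost` and `clips_affordable` are hypothetical helpers; the 625 and 2,250 monthly credits come from the table above.

```python
# ASSUMPTION: illustrative credits-per-second rates only. Real rates vary
# by model and change over time, so confirm them before budgeting.
CREDITS_PER_SECOND = {
    "gen3_alpha": 10,
    "gen4_turbo": 5,
}


def clip_cost(model: str, seconds: int) -> int:
    """Credits consumed by one clip of the given length."""
    return CREDITS_PER_SECOND[model] * seconds


def clips_affordable(monthly_credits: int, model: str, seconds: int) -> int:
    """Whole clips a monthly allowance covers (leftover credits are ignored)."""
    return monthly_credits // clip_cost(model, seconds)


print(clip_cost("gen4_turbo", 10))              # 50
print(clips_affordable(625, "gen4_turbo", 10))  # 12 (Standard, ~625 credits)
print(clips_affordable(2250, "gen4_turbo", 10)) # 45 (Pro, ~2,250 credits)
```

Remember that upscales, restyles, and rejected takes draw from the same pool, so real budgets should leave headroom.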

Runway AI vs. Alternatives: Honest Comparison

AI video generation is a growing field — and while Runway AI dominates in professional-grade realism, several other platforms offer unique strengths. Here’s how it compares:

| Platform | Pricing Model | Main Features | Pros | Cons |
|---|---|---|---|---|
| Runway AI | Subscription + credits | Text-to-video, image-to-video, motion brush, 4K upscaling | Best cinematic control, filmmaking focus | Silent outputs, pay-per-use |
| Pika Labs | Free & Paid | Quick AI video generation | Fast results, good for casual use | Limited realism and motion depth |
| Kaiber | Free & Paid | Text/image to video, style-rich animations | Stylish results, user-friendly | Less suited for narrative or film visuals |
| Stable Video Diffusion | Open Source | Research-based AI video | Free, customizable, developer-friendly | Requires coding knowledge, not for beginners |

If your goal is cinematic storytelling, realistic motion, or professional concept visualization, Runway leads the field.

If you’re experimenting casually or creating stylized short content, tools like Kaiber or Pika Labs can complement your workflow.

Use Cases: When Runway AI Makes the Most Sense

Runway AI for video generation isn’t just a novelty—it’s becoming an essential creative partner across multiple industries:

1. Filmmaking & Storyboarding

Directors use Runway to pre-visualize scenes and test camera angles before shooting. It saves time and money during pre-production.

2. Marketing & Advertising

Brands use Runway-generated videos to storyboard campaigns, produce quick concept visuals, or even create AI-driven commercials without renting equipment.

3. Education & Research

Educators and researchers use AI-generated video for storytelling, training materials, and creative expression.

4. Independent Creators & YouTubers

Solo creators can produce short films, music videos, or visual essays using just prompts and images — skipping the need for expensive cameras or studios.

5. Game & Concept Design

Runway helps designers create motion reference clips for worlds, characters, and cutscene previsualization.

Why Runway’s Gen-3 and Gen-4 Models Matter

Runway’s evolution from Gen-1 to Gen-4 marks one of the most impressive leaps in AI video realism.

- Gen-3:

  - Great for quick generation and stylized visuals.

  - Faster rendering.

  - Ideal for concept design or artistic motion.

- Gen-4 (Turbo):

  - Enhanced realism, smoother motion.

  - More accurate camera control and lighting.

  - Better character consistency.

  - Ideal for professional filmmakers and branded content.

Pro Tip: Use Gen-4 with both text and image inputs for the most coherent results. Text defines motion, while images set style and detail.

FAQ: Everything You Need to Know About Runway AI for Video Generation

1. Do I need editing experience to use Runway AI?

No! Runway is designed for all skill levels. Its intuitive interface allows even beginners to generate and refine cinematic clips without any editing background.

2. Can I use Runway for commercial projects?

Yes. As long as you follow Runway’s licensing terms, you can use your generated videos for marketing, branding, or even film distribution.

3. Does Runway support sound or voiceover?

Currently, videos generated by Runway are silent. However, you can easily add background music, voiceovers, or effects in post-production using tools like Descript, CapCut, or Premiere Pro.

4. Can I edit or extend my generated videos?

Absolutely. You can trim, restyle, expand, and upscale clips directly inside Runway or re-import them for further edits.

5. What’s the key difference between Gen-3 and Gen-4?

Gen-3 focuses on speed and artistic diversity, while Gen-4 emphasizes realistic camera movement, motion physics, and visual fidelity — perfect for filmic scenes.

6. Is Runway available on mobile?

Runway is primarily browser-based and optimized for desktop/laptop use. Mobile support is limited, but exports can be shared or viewed on mobile devices.

7. How do credits work exactly?

Each action — video generation, upscale, or restyle — consumes credits. Your monthly subscription refills credits automatically, and extra credits can be purchased anytime.

8. Can Runway replace real video production?

Not entirely. While it accelerates pre-production and visual development, live shoots still offer nuance that AI can’t yet replicate. However, it can drastically reduce concept visualization costs.

9. Does Runway offer collaboration features?

Yes, Runway supports team workspaces, allowing multiple creators to collaborate on a project and review outputs together — perfect for studios and agencies.

Why Runway AI Is a Must-Have Creative Tool

We’re at the dawn of an AI filmmaking revolution — and Runway AI for video generation sits at the heart of it.

Here’s why creators, brands, and filmmakers can’t ignore it anymore:

- Fast Visual Storytelling: Create cinematic scenes in minutes, not weeks.

- Creative Independence: No need for full production teams: just your imagination and prompts.

- Cost Efficiency: Professional-grade visuals without studio rentals or post-production costs.

- Scalable Quality: From 720p concepts to 4K upscaled films, Runway supports projects at every level.

- Accessible Innovation: Browser-based and intuitive, so anyone can direct their own short film.

Final Thoughts: The New Frontier of Visual Creation

In an era where creativity meets computation, Runway AI for video generation bridges the gap between imagination and execution.

Whether you’re building a sci-fi teaser, animating your script, or visualizing brand content — Runway gives you the tools to bring cinematic dreams to life.

It’s not about replacing filmmakers; it’s about empowering them.

With Gen-4’s realism, flexible pricing, and evolving AI motion control, Runway stands as the most complete platform for AI-powered storytelling in 2025.

So if you’ve ever imagined a scene, a world, or a story — now’s the time to bring it to life.

Head to runwayml.com, start your first project, and experience how Runway AI for video generation transforms imagination into motion.

The future of filmmaking is not coming — it’s already here.
