Master Hugging Face NLP and LLM – Fine-Tuning, Reasoning & Deployment

Where Machines Start to Speak Like Us

There’s a moment when you run your first text-generation model in Hugging Face and watch a string of random words turn into a full sentence — and your brain just stops.

You realize something powerful: this isn’t science fiction anymore. It’s language intelligence being shaped by your own hands.

That’s what Hugging Face NLP and LLM is all about — taking the mystery of Transformers, tokenization, and fine-tuning, and turning it into something you can actually use. No ivory-tower theory, no robotic slides. Just code, emotion, and creation.

This course doesn’t just show you how to work with Large Language Models (LLMs). It teaches you how to speak their language, how to listen when they hallucinate, and how to make them work for you — ethically, powerfully, responsibly.

Let’s break it down.

Module 1 – Foundations of Hugging Face NLP and LLM

Before you dive into building your own AI assistant, summarizer, or emotion detector, you need to understand how these language models think.

This isn’t a copy-paste journey. This is where you and the machine start to align.

Step 0 – The Setup: Your Playground of Possibility

It starts simple: you set up Python, install Hugging Face Transformers, PyTorch (or TensorFlow, if that’s your vibe), and create your free Hugging Face Hub account.

You’ll configure your GPU or cloud environment. The process feels like setting up a little lab in your bedroom — cables, code, caffeine, and curiosity.

This is your creative space, and once it’s ready, you’re unstoppable.

Step 1 – Transformers and the Birth of Modern NLP

Here’s where the history hits.

The world moved from bag-of-words to embeddings to the magic of Transformers — a shift so massive it changed AI forever.

You’ll learn what makes BERT, GPT, and T5 different — how some models read, others write, and some translate thought itself. You’ll see why attention isn’t just a word; it’s the heartbeat of modern Hugging Face NLP and LLM systems.

You start feeling the rhythm of it — tokens flowing, attention aligning, the machine learning what to focus on. It’s weirdly human.

Step 2 – How Transformers Actually Work

You’ll peel back the architecture:

Encoders (BERT-style) handle understanding tasks — classification, NER, emotion detection.

Decoders (GPT-style) handle generation — conversation, stories, answers.

And encoder-decoder hybrids (like T5) do both.

Inside, you meet self-attention — the mechanism that lets every word look at every other word and decide what matters. It’s not just math; it’s perception, empathy, logic. It’s how machines start making sense of us.
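To make "every word looks at every other word" concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. The toy vectors are made up, and the same vectors are reused as queries, keys, and values, which is a simplification: real Transformers learn separate projection matrices for each.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention for one sequence of token vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly does this token match every token in the sequence?
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input: 3 tokens with 2-dimensional vectors (made-up numbers).
# Real models first project the input into separate Q, K and V matrices.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` says how much one token attends to every token in the sequence, and each row sums to 1.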

Step 3 – Model Inference with Pipelines

Now you get your hands dirty. You’ll use the famous pipeline() API to load pre-trained models for tasks like:

- Sentiment analysis

- Summarization

- Translation

- Text generation

It’s shockingly simple: five lines of code and suddenly your computer can summarize a paragraph or finish your sentence.

You’ll move models to GPU, explore logits, and see how the model turns those raw scores into probabilities and those probabilities into predictions.

It’s addictive.
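Here is a rough sketch of both ideas: calling `pipeline()` and turning logits into probabilities. The `classify` helper and the example logits are illustrative assumptions, not course code. The pipeline call downloads a default checkpoint on first use, so it is wrapped in a function rather than run at import time.

```python
import math


def logits_to_probs(logits):
    """Softmax: convert raw model scores (logits) into probabilities."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(texts, device=-1):
    """Run a sentiment pipeline. device=-1 means CPU, 0 means the first GPU."""
    # Imported here so the rest of the file works without the library installed.
    from transformers import pipeline

    classifier = pipeline("sentiment-analysis", device=device)
    return classifier(texts)  # a list of {"label": ..., "score": ...} dicts


# Hypothetical logits for the classes [NEGATIVE, POSITIVE].
probs = logits_to_probs([-1.2, 2.3])
```

Calling `classify(["I love this course"])` should return one dictionary per input with a label and a confidence score. The exact values depend on the checkpoint that gets loaded.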

Step 4 – Bias, Ethics, and Human Shadows in Language

This is where everything slows down.

Because when you give machines our language, you give them our flaws too. Bias. Prejudice. Misinformation.

You’ll study how LLMs mirror human bias, how they sometimes amplify it, and how developers can train or fine-tune responsibly.

There’s no moral filter built in — it’s something you, the builder, must bring to the code.

This part is heavy, but it’s essential. Because understanding what could go wrong is what makes your creations trustworthy.

Module 2 – The Hugging Face Ecosystem: Where Everything Connects

Once you’ve built your foundation, you’ll explore the Hugging Face ecosystem — a network of tools designed to make deep learning faster, cleaner, and surprisingly collaborative.

You’re not just a coder anymore. You’re part of a global movement.

Step 5 – Tokenization: Breaking Down the World into Words

You can’t feed raw text into an LLM. It has to be tokenized.

This step is where you’ll learn how to break sentences into tokens, the subword units a model actually reads, using algorithms like WordPiece and BPE and libraries like SentencePiece.
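To get a feel for how BPE builds a vocabulary, here is a toy sketch of its core loop: count adjacent symbol pairs, merge the most frequent one, repeat. The three-word corpus and its frequencies are invented for illustration, and production tokenizers add many details this skips.

```python
from collections import Counter


def most_frequent_pair(words):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


# Made-up corpus: word -> frequency. Each word starts as single characters.
corpus = {"low": 5, "lower": 2, "lowest": 3}
words = {tuple(word): freq for word, freq in corpus.items()}

merges = []
for _ in range(2):  # learn two merge rules
    pair = most_frequent_pair(words)
    merges.append(pair)
    words = merge_pair(words, pair)
```

After two merges the frequent stem `low` has become a single token, while the rarer endings stay split into characters.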

You’ll learn about padding, truncation, and special tokens like [CLS], [SEP], and [PAD]. You’ll even train your own tokenizer and save it like a secret recipe.
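Padding, truncation, and special tokens are easier to see than to describe. The sketch below uses a hypothetical `encode` helper that mimics what a BERT-style tokenizer does to one sequence. Real tokenizers return integer IDs rather than strings.

```python
def encode(tokens, max_length, pad_token="[PAD]"):
    """Wrap tokens in [CLS]/[SEP], then truncate or pad to max_length."""
    if max_length < 2:
        raise ValueError("max_length must leave room for [CLS] and [SEP]")
    body = tokens[: max_length - 2]  # truncation: keep room for 2 special tokens
    sequence = ["[CLS]"] + body + ["[SEP]"]
    attention_mask = [1] * len(sequence)  # 1 = real token
    n_pad = max_length - len(sequence)
    sequence += [pad_token] * n_pad       # padding up to the fixed length
    attention_mask += [0] * n_pad         # 0 = padding the model should ignore
    return sequence, attention_mask


short_seq, short_mask = encode(["hello", "world"], max_length=6)
long_seq, long_mask = encode([f"tok{i}" for i in range(10)], max_length=6)
```

The attention mask is what lets a model batch sequences of different lengths without treating `[PAD]` as real text.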

It’s the most technical kind of poetry — watching language become data and then become meaning again.

Step 6 – The 🤗 Datasets Library: The Fuel of Every Model

Data is the soul of NLP.

You’ll load and explore thousands of open-source datasets from the Hugging Face Hub, learning how to preprocess, map, filter, and tokenize them efficiently.

You’ll work with CSVs, JSONs, and massive text corpora like Common Crawl. The datasets library is pure magic — it lets you handle massive data pipelines without breaking your RAM or your spirit.
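As a small taste of the map-and-filter workflow, here is a sketch that builds an in-memory dataset and preprocesses it. The column name `text`, the `n_words` field, and the three-word threshold are assumptions chosen for the example.

```python
def add_word_count(example):
    """Per-row preprocessing step: attach a word count to each example."""
    example["n_words"] = len(example["text"].split())
    return example


def build_dataset(rows):
    """Build a small dataset from a dict of columns and clean it."""
    # Imported here so the helper above works without the library installed.
    from datasets import Dataset

    dataset = Dataset.from_dict(rows)
    dataset = dataset.map(add_word_count)                    # add a column
    return dataset.filter(lambda ex: ex["n_words"] >= 3)     # drop short rows
```

Calling `build_dataset({"text": ["too short", "this one is long enough"]})` should keep only the second row. The same `map` and `filter` calls apply to datasets loaded from the Hub.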

You’ll start thinking like a curator, not just a coder.

Step 7 – Accelerate: Training at Lightning Speed

This is where scale meets simplicity.

With 🤗 Accelerate, you’ll learn to train across multiple GPUs or even nodes — without rewriting your training loop.

You’ll experiment with mixed-precision training (FP16) for faster training and lower memory use.
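The sketch below shows roughly what an Accelerate training loop looks like. It assumes you already have a PyTorch model, optimizer, dataloader, and loss function. Those four arguments and the `train_loop` name are placeholders for your own code.

```python
def train_loop(model, optimizer, dataloader, loss_fn, epochs=1, mixed_precision="no"):
    """A plain PyTorch-style loop with the Accelerate hooks added.

    Pass mixed_precision="fp16" on supported GPUs to enable FP16 training.
    """
    # Imported here so the function can be defined without the library installed.
    from accelerate import Accelerator

    accelerator = Accelerator(mixed_precision=mixed_precision)
    # prepare() moves everything to the right device(s) and wraps it as needed.
    model, optimizer, dataloader = accelerator.prepare(model, optimizer, dataloader)

    model.train()
    for _ in range(epochs):
        for inputs, targets in dataloader:
            optimizer.zero_grad()
            loss = loss_fn(model(inputs), targets)
            accelerator.backward(loss)  # used in place of loss.backward()
            optimizer.step()
    return model
```

Compared with a single-device loop, only three things change: creating the `Accelerator`, calling `prepare()`, and calling `accelerator.backward(loss)`.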

It’s the moment you realize that the same code running on your laptop can scale to supercomputers.

That’s the Hugging Face way — democratizing access to power.

Step 8 – Collaborating on the Hugging Face Hub

AI isn’t a solo sport.

You’ll create your own model repositories, upload checkpoints, write Model Cards, and version your work using Git.

This is how you join the Hugging Face community — not as a spectator, but as a creator.

You’ll see your model live on the web, share it with others, and maybe — just maybe — someone across the world will use your model to build something new.

That’s when it hits: you’re not just learning NLP and LLMs. You’re helping shape the future of language itself.

Module 3 – Hands-On Fine-Tuning and Classical NLP Tasks

This is where you get real. Models stop being abstract and start becoming personal.

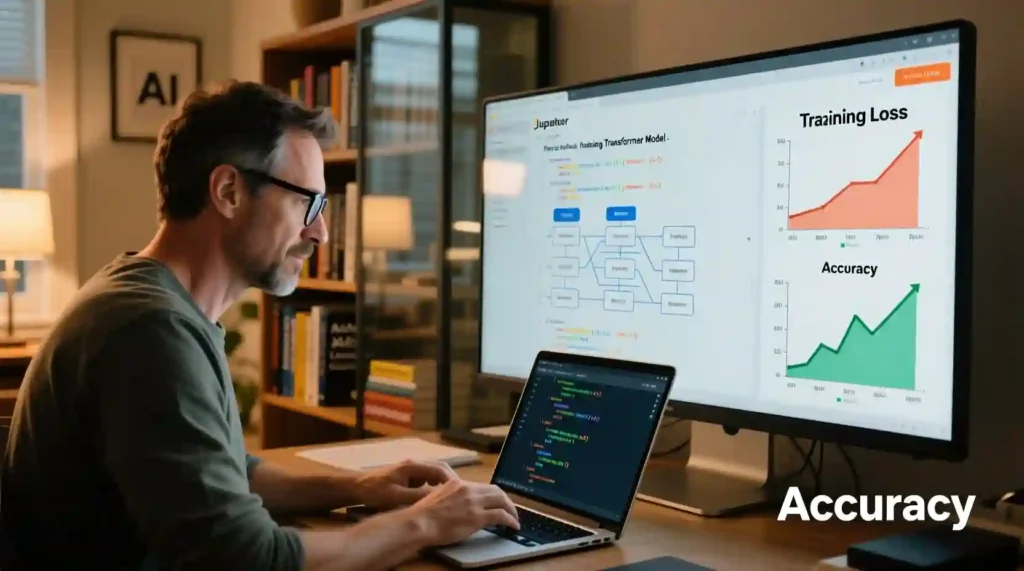

Step 9 – Fine-Tuning Pretrained Models

You’ll begin by taking a pretrained Transformer (maybe DistilBERT, BART, or T5) and fine-tuning it for a task that matters to you.

Sentiment analysis for mental-health tweets. Product review classification. A chatbot that actually listens.

The Trainer API makes it smooth:

You define training arguments and callbacks, and log metrics to Weights & Biases or TensorBoard.
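Here is a minimal sketch of that setup. The hyperparameter values are illustrative guesses rather than recommendations, and the sketch assumes the model and the tokenized datasets already exist. Logging to TensorBoard needs the `tensorboard` package installed.

```python
def compute_metrics(eval_pred):
    """Accuracy from (logits, labels), as passed in by the Trainer at eval time."""
    logits, labels = eval_pred
    # Predicted class = index of the largest logit in each row.
    predictions = [max(range(len(row)), key=row.__getitem__) for row in logits]
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return {"accuracy": correct / len(labels)}


def build_trainer(model, train_dataset, eval_dataset, output_dir="out"):
    """Wire a model and tokenized datasets into a Trainer."""
    # Imported here so compute_metrics works without the library installed.
    from transformers import Trainer, TrainingArguments

    args = TrainingArguments(
        output_dir=output_dir,
        num_train_epochs=3,               # illustrative values, tune for your task
        per_device_train_batch_size=16,
        learning_rate=2e-5,
        logging_steps=50,
        report_to="tensorboard",
    )
    return Trainer(
        model=model,
        args=args,
        train_dataset=train_dataset,
        eval_dataset=eval_dataset,
        compute_metrics=compute_metrics,
    )
```

From there, `build_trainer(...).train()` starts fine-tuning and `.evaluate()` reports the accuracy computed above.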

You’ll watch your model’s loss drop like a heartbeat stabilizing.

That’s when it clicks — fine-tuning is more than code. It’s alignment.

Step 10 – Classical NLP Tasks That Still Matter

Named Entity Recognition (NER).

Part-of-Speech tagging.

Emotion detection.

These are the unsung heroes of the NLP world — the foundation that lets bigger models think clearly.

You’ll train token classifiers and learn how small tasks build the grammar of intelligence.

Each token becomes a clue, a tiny neuron in a growing brain.

Step 11 – Sequence-to-Sequence Magic

Then comes the transformation.

You’ll work with BART, T5, or MarianMT to summarize long texts, translate between languages, or even rephrase messy input into clarity.

It’s not just NLP — it’s art. Watching your model rewrite in your own tone feels intimate, like watching language breathe.

You’ll explore abstractive summarization, machine translation, and custom collators that handle every weird dataset you throw at it.

You’ll stop fearing the complexity and start dancing with it.

Step 12 – Question Answering & Masked Language Modeling

This step feels like teaching your model how to reason.

You’ll build extractive Q&A systems on SQuAD and generative ones with GPT-style models.
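An extractive QA model scores every token as a possible answer start and answer end. One common way to decode those scores, sketched here with made-up logits, is to pick the valid span with the highest combined score.

```python
def best_span(start_logits, end_logits, max_answer_len=15):
    """Return (start, end) indices of the highest-scoring valid answer span."""
    best_start, best_end, best_score = None, None, float("-inf")
    for start, start_score in enumerate(start_logits):
        # The end must not come before the start or exceed the length limit.
        last = min(start + max_answer_len, len(end_logits))
        for end in range(start, last):
            score = start_score + end_logits[end]
            if score > best_score:
                best_start, best_end, best_score = start, end, score
    return best_start, best_end


# Context tokens and hypothetical logits for "What is the capital of France?"
context = ["Paris", "is", "the", "capital", "of", "France"]
start_logits = [4.0, 0.1, 0.2, 0.3, 0.1, 1.0]
end_logits = [3.5, 0.1, 0.1, 0.2, 0.1, 2.0]

start, end = best_span(start_logits, end_logits)
answer = " ".join(context[start : end + 1])
```

With these numbers the best span is the single token `Paris`.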

Masked language modeling (MLM) becomes your secret weapon — training your model to fill in missing pieces of thought.
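The masking step itself is simple to sketch. This simplified version hides a random share of tokens behind `[MASK]` and keeps the originals as labels. BERT's actual recipe is a bit more involved: of the selected tokens, some are swapped for random tokens or left unchanged.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Randomly replace tokens with [MASK]; labels hold the hidden originals."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    inputs, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            inputs.append("[MASK]")
            labels.append(token)   # the model is trained to recover this
        else:
            inputs.append(token)
            labels.append(None)    # no loss is computed at this position
    return inputs, labels


sentence = "the cat sat on the mat".split()
masked_inputs, masked_labels = mask_tokens(sentence, mask_prob=0.3, seed=0)
```

Only the masked positions contribute to the training loss, which is why the other labels are left empty.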

Each token prediction feels like a neuron waking up.

And somewhere between the loss functions and gradients, you’ll realize: this isn’t about machines understanding us. It’s about us learning to teach understanding.

Module 4 – Advanced LLM Engineering & Real-World Deployment

This is where Hugging Face NLP and LLM becomes alive in the world.

Not on your local notebook. Not in a Kaggle kernel. But in real apps, used by real people.

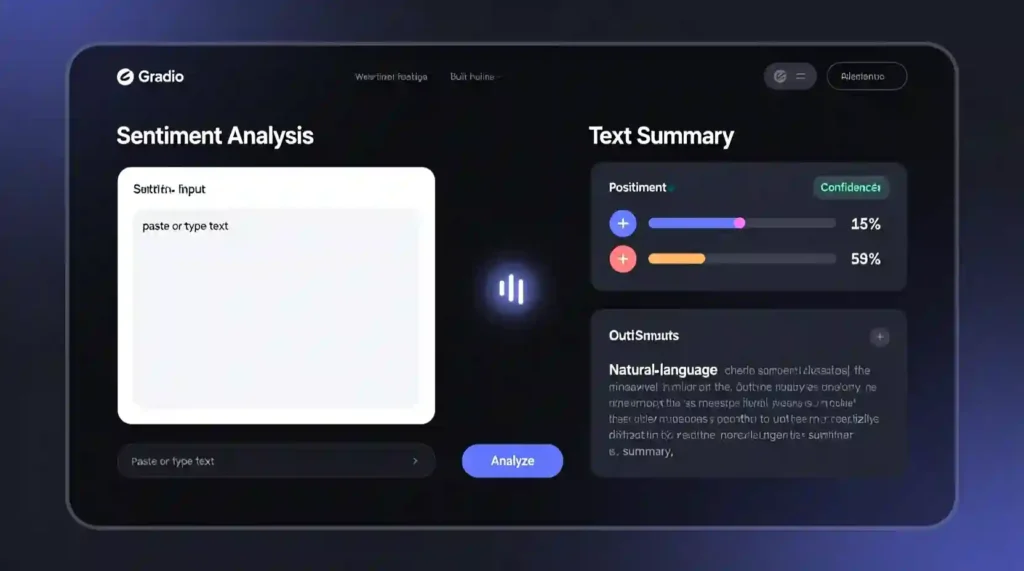

Step 13 – Building Interactive Demos That Wow

You’ll bring your models to life using Gradio or Streamlit, crafting simple web UIs that anyone can use.

Maybe you build a sentiment-analyzing diary.

Or a summarizer for long YouTube transcripts.

Or a translation tool that sounds human.

Then you deploy it as a Hugging Face Space — one click, live demo, no server headache.
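A sentiment diary demo could be sketched like this. The `classify` argument stands in for whatever model you plug in and is assumed to return a dictionary with `label` and `score` keys. The function names and the title are made up for the example.

```python
def diary_feedback(text, classify):
    """Turn a classifier's prediction into a friendly sentence."""
    if not text.strip():
        return "Write something first."
    prediction = classify(text)
    label = prediction["label"].lower()
    return f"This entry reads as {label} (confidence {prediction['score']:.0%})."


def build_demo(classify):
    """Wrap the feedback function in a simple text-in, text-out web UI."""
    # Imported here so diary_feedback works without the library installed.
    import gradio as gr

    return gr.Interface(
        fn=lambda text: diary_feedback(text, classify),
        inputs="text",
        outputs="text",
        title="Sentiment Diary",
    )
```

Calling `build_demo(my_classifier).launch()` serves the UI locally. The same script can be used as the app file of a Space.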

You’ll see comments from strangers using your work.

And it’ll hit you — this is how AI spreads: through creation, not corporations.

Step 14 – Parameter-Efficient Fine-Tuning (PEFT)

Here’s where you learn to push limits with LoRA and PEFT — the techniques that make fine-tuning giant models affordable.

You’ll freeze the vast majority of the model’s weights (often more than 99%) and train only a few small adapter matrices.
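A little arithmetic shows where the savings come from. LoRA leaves a weight matrix of shape d_out × d_in frozen and learns two thin matrices of rank r next to it, so the trainable count for that matrix drops from d_out × d_in to r × (d_out + d_in). The layer size and rank below are illustrative assumptions.

```python
def lora_param_counts(d_out, d_in, rank):
    """Compare full fine-tuning with LoRA for a single weight matrix."""
    full = d_out * d_in              # every entry of W is trainable
    lora = rank * (d_out + d_in)     # B is d_out x rank, A is rank x d_in
    return lora, full, lora / full


# Example: one 4096 x 4096 projection matrix with rank-8 adapters.
lora_params, full_params, fraction = lora_param_counts(4096, 4096, 8)
print(f"{lora_params:,} of {full_params:,} parameters ({fraction:.2%})")
```

For this one matrix the adapters hold under half a percent of the parameters. The share for a whole model depends on which layers receive adapters.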

You’ll fine-tune a billion-parameter model on modest hardware, maybe even a laptop with a decent GPU, and feel like you just hacked physics.

This is power without waste — the future of efficient AI.

Step 15 – Training Language Models from Scratch

You’ll build a Causal Language Model (CLM) from zero: define the vocabulary, train the tokenizer, and write the full training loop.
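At the heart of that training loop is one idea: each position learns to predict the token that follows it. Here is a sketch of how a stream of token IDs can be cut into input and label pairs. The helper name and the block size are assumptions for illustration.

```python
def causal_lm_examples(token_ids, block_size):
    """Cut a token stream into (inputs, labels) pairs shifted by one position."""
    examples = []
    for i in range(0, len(token_ids) - block_size, block_size):
        chunk = token_ids[i : i + block_size + 1]
        inputs = chunk[:-1]   # what the model sees
        labels = chunk[1:]    # the next token at every position
        examples.append((inputs, labels))
    return examples


# Pretend these integers are token IDs from your own tokenizer.
stream = list(range(9))
pairs = causal_lm_examples(stream, block_size=4)
```

Every label is simply the input shifted left by one, which is the whole training signal for a causal language model.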

You’ll feed it custom data: your blog posts, your thoughts, your style.

And soon, it’ll start echoing you.

That’s when it becomes personal — an LLM that sounds like your voice, trained by your words.

It’s a strange, humbling experience — realizing you’ve created something that mirrors your inner dialogue.

Step 16 – Building Advanced Reasoning Models

Finally, the mind-expanding part: reasoning.

You’ll experiment with Chain-of-Thought (CoT) prompting and reasoning datasets that help models solve logic tasks step-by-step.
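A few-shot Chain-of-Thought prompt is just carefully arranged text. The sketch below builds one from worked examples. The "Let's think step by step" cue is a widely used phrasing, and the sample questions are invented.

```python
def build_cot_prompt(question, examples):
    """Assemble a few-shot prompt whose examples show their reasoning."""
    parts = []
    for ex in examples:
        parts.append(
            f"Q: {ex['question']}\n"
            f"A: Let's think step by step. {ex['reasoning']} "
            f"The answer is {ex['answer']}."
        )
    # End with the new question and the same cue, leaving the reasoning open.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


worked_examples = [
    {
        "question": "Tom has 3 apples and buys 5 more. How many does he have?",
        "reasoning": "He starts with 3 and adds 5, so 3 + 5 = 8.",
        "answer": "8",
    }
]
prompt = build_cot_prompt(
    "A train has 4 cars with 20 seats each. How many seats in total?",
    worked_examples,
)
```

The resulting string can be passed to any text-generation model. The worked example nudges it to write out its reasoning before the final answer.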

It’s not just “predict the next word” anymore.

It’s think before you answer.

You’ll see how reasoning turns prediction into understanding — and understanding into something that feels like thought.

The Hugging Face Ethos – Community, Openness, Humanity

Every tool you’ve used — 🤗 Datasets, 🤗 Accelerate, Gradio, PEFT — exists because of a community that believes AI should be open, kind, and human-centered.

You’ll realize you’re part of a global rhythm: researchers, artists, devs, dreamers — all building together.

Every model card, every dataset, every space is a shared language of creation.

That’s what makes Hugging Face NLP and LLM more than a course.

It’s a movement.

Why This Journey Changes How You See AI Forever

After finishing, you won’t look at chatbots or summarizers the same way.

You’ll see the care behind each layer, each token, each bias corrected by a human touch.

You’ll know how to:

✅ Fine-tune and deploy real NLP models

✅ Build reasoning and generative systems

✅ Use LoRA and PEFT for scalable training

✅ Create demos that people actually use

✅ Share your models and join the Hugging Face Hub community

And more importantly — you’ll feel like you belong in this world of builders who care about both performance and ethics.

Final Thoughts – Language, Humanity, and You

When I finished this journey, I didn’t just understand LLMs — I understood myself better.

Because teaching a machine to understand human words means first confronting your own complexity.

That’s the quiet truth behind Hugging Face NLP and LLM — it’s not about replacing humanity. It’s about amplifying it.

So go fine-tune that model.

Deploy it. Share it.

Let your voice echo through the circuits.

Because in the end, the future of AI isn’t about machines learning from us — it’s about us learning how to build with heart.

💥 Call to Action

If you’ve ever dreamed of building language intelligence that feels human — this is your path.

Start learning Hugging Face NLP and LLM today at https://huggingface.co/learn.

It’s free, open, and waiting for you to bring your words to life.
